Fast, accurate Text-to-SQL agent for enterprise data warehouses

1000+

total columns handled

18.57s

P95 end-to-end latency

20%

accuracy gain vs SaaS

Background

The client was building a finance-first agentic platform to help financial experts in enterprises manage finances in one place. Most of their mission-critical data, like financial records, transactions, and inventory metrics, lived in structured databases, rendering it inaccessible to financial experts who lacked coding skills.

The client initially experimented with a Text-to-SQL agent built on the Numberstation API (now part of Alation). While the approach showed early promise, it faced challenges around accuracy and latency, limiting its suitability for high-stakes use cases.

To address these constraints, the client partnered with our team to design a custom, high-performance Text-to-SQL agent. The new system was built to handle complex financial queries, adapt to diverse database schemas, and integrate seamlessly with enterprise software such as Oracle NetSuite as well as different SQL dialects including Postgres and Snowflake.

Technical Challenges

The project's complexity stemmed from three core requirements:

-

Massive Schema Navigation: Enterprise datastores often contained thousands of columns. The agent's critical first step was to intelligently select the precise schema information relevant to the query, as any failure here would cascade and compromise the LLM's accuracy.

-

True Generalizability: The solution demanded a flexible, generalist architecture. It could not be a single-use tool but an adaptable agent capable of integrating with diverse database dialects like Oracle, Postgres, and Snowflake.

-

Deep Domain Adaptation: To be effective, the agent had to learn and apply the language of the business. This included understanding complex, domain-specific financial terminology and ingesting proprietary, business-specific formulas.

Solution Principles

To address these challenges, the solution was designed with these key principles in mind:

- Accuracy: Ensure efficient candidate selection and accurate SQL generation.

- Handling Large Schemas: Effectively manage and query databases with extensive table structures.

- Flexibility: Adapt automatically to new datastores, business-specific terminologies, and custom metrics.

- Continuous Learning: Constantly learn and improve from user and admin feedback.

Approach

We began by assembling a dataset of questions paired with correct SQL queries. This dataset spanned across different business domains, providing a robust foundation for evaluation, a critical step for successful agent development.

The Importance of Context

Context is the single most important factor in Text-to-SQL accuracy.

For an LLM to successfully translate a natural language query, it requires more than just the query itself; it must also receive a comprehensive textual description of the database. This crucial context includes table names, column definitions, functional descriptions, and other relevant metadata.

We evaluated several methods for providing this schema information, including the use of Data Definition Language (DDL), M-Schema, and the incorporation of few-shot examples. Our findings consistently indicate that model performance improves significantly as the quality and completeness of the provided context increase.

Collections

Our design incorporates the concept of a "collection," a curated set of tables and SQL samples specifically designed to address queries within specific business domains.

The primary function of these collections is to narrow the operational focus of the LLM. This targeted approach significantly enhances the relevance and accuracy of the schema retrieval.

The system was designed to allow both business administrators and domain experts to create and manage collections. These responsibilities include:

- Pruning non-essential columns.

- Enriching metadata descriptions for clarity.

- Modifying SQL expressions.

- Adding feedback.

- Defining domain-centric metrics and knowledge.

Collection Router

Users can either manually select a specific data collection or perform a "global search." When no collection is manually specified, the incoming prompt is first processed by the Collection Router. Router reasons the user's question and automatically map it to the one or more collections most relevant to the query.

Metrics and Feedback

A simple query translator wasn't enough; the agent needed to be a true financial expert. To achieve this, we systematically added business-specific metrics and formulas into its knowledge base. This allows the system to correctly understand and answer questions like "What is our current DSO?" by knowing the precise calculation for Days Sales Outstanding.

This knowledge base is constantly improved through a continuous feedback loop. When users or admins identify an error in a calculation, that feedback is used to refine the agent's logic. This ensures the platform constantly evolves, sharpens its accuracy on complex business logic, and improves overall system performance.

Code Generation

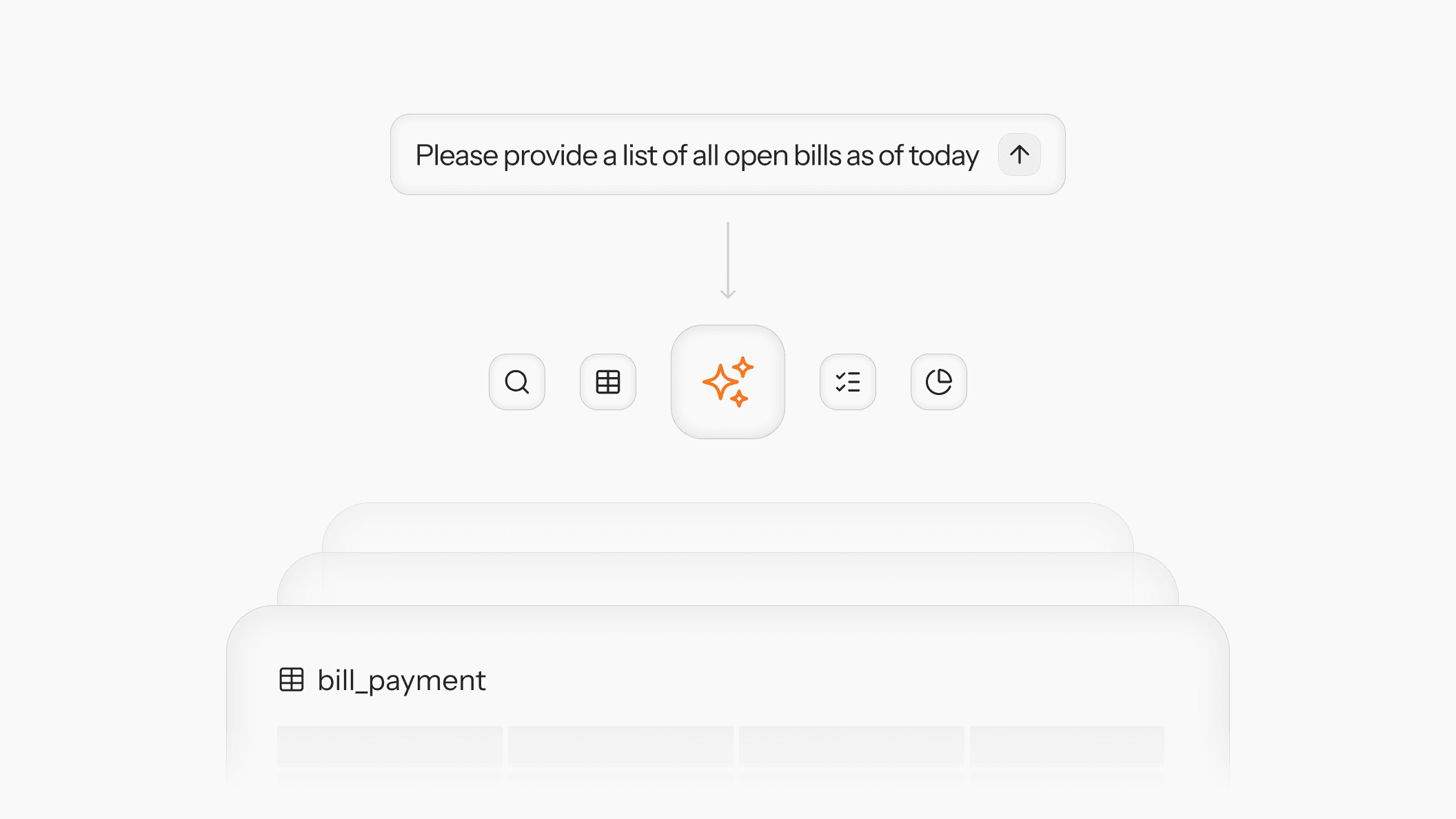

The assembled context is then passed to the core execution agent, which is responsible for planning, generating, and executing complex queries.

Its agentic nature is critical for handling financial analysis, which often requires sequential, multi-query execution where subsequent steps are dependent on prior results. This approach also empowers the agent to dynamically decide on requirements, such as whether to generate charts (using Vega descriptions) or apply other customizations based on the session memory.

Finally, the agent synthesizes the retrieved data into a concise summary, highlighting key insights that directly answer the user's query.

Outcome

Our rapid development cycle enabled the client to ship production-ready features within weeks, a critical advantage in the fast-moving AI landscape.

Through targeted optimizations in schema selection and query planning, our solution achieved substantial latency improvements, reducing p95 query latency to 18.56 seconds and median (p50) latency to just 10.99 seconds for end-to-end generation—including summary and visual representations, where applicable. This is significantly faster than previous SaaS benchmarks.

Beating Proprietary Tools

Clients reported immediate and noticeable improvements in both accuracy and responsiveness compared to the rigid, proprietary SaaS offerings they had been using.

Crucially, our solution replaced their unpredictable, per-user, usage-based pricing with a highly scalable and predictable cost structure. The client gained superior performance for a far better long-term ROI.

To ensure easy management and scalability, we delivered a production-ready Admin UI and supporting APIs, empowering the client to create and manage data collections across multiple datastores.

Is your organization struggling with slow queries, inaccurate insights, or the rigid, costly nature of off-the-shelf analytics tools? Reach out to us today.